
Elements of Learning and Intelligence

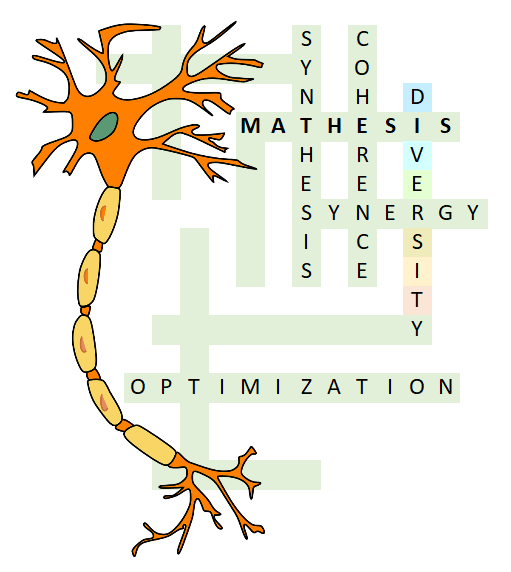

Mathesis (Hellenic Μάθησις, “learning”) is an interdisciplinary theory of learning and intelligence that combines biology, neuroscience, computer science, engineering, and various branches of mathematics. It is largely a formal theory that attempts to unify all learning under a common framework, deriving its axiomatic basis and approach from nature and biology. It is also a theory that demonstrates the strength of mathematics, the imperative of biology in learning, and the potency of interdisciplinarity over complexity. The overall effort, which has spanned more than three decades, arguably demonstrates the power of perseverance, too.

Mathesis will be published in three volumes. The first volume is available below. A brief animation with principles and concepts from the theory appears in the video on the right.

Volume I: Synthesis and Coherence

"for all things which have multiple parts, and which are not merely a pile but a whole beyond the parts, there is a cause"

πάντων γὰρ ὅσα πλείω μέρη ἔχει καὶ μή ἐστιν οἷον σωρὸς τὸ πᾶν ἀλλ᾿ ἔστι τι τὸ ὅλον παρὰ τὰ μόρια, ἔστι τι αἴτιον

-- Aristotle, Metaphysics, 8.6, 1045a, c. 360 BCE.

The first volume of the theory provides an introduction to learning, discusses its connection with biology, and defines synthesis and coherence as the first foundational principles of learning systems. It introduces a synergy algebra and the synergy conjecture as essential elements of synthesis. It also defines operations that manipulate coherence, demonstrates their relationship with recursion, and uses them to define coherent learning and some elementary coherent neural structures. As a preliminary evaluation of the theory, it applies some of those elements to optical character recognition and derives a neural system that dramatically improves the state of the art. In addition, the first volume introduces the split notation as a method to reduce notational complexity and proposes the N programming language.

Synthesis and coherence are the two main topics of the first volume. They represent processes that create complexity from simplicity and manage the complexity.

Synthesis derives from biology and cell theory. It implies structure, synergy, and state. It thrives in diversity and assumes a greater partition of interactions into detachment, synergy, and mimesis. Neural networks and structures are recursively constructed as synergetic aggregations of other such networks or structures. A neural algebra is introduced to describe the process formally. Examples are shown for generative and classification networks.

Coherence provides a theoretical foundation for learning, underlies and unifies various learning phenomena, and enables the creation of more sophisticated learning systems. A large part of Mathesis is a transition from the current empirical state of the art to coherent entities, such as coherent functionality, coherent learning calculi, coherent structure, coherent plasticity and growth, and coherent evolution. Coherence also marks the beginning of a transition to an evolutionary process and approach that is assumed to be necessary for intelligence.

Acknowledgments

Mathesis has been directly influenced by the collective work of David Hubel and Torsten Wiesel, David Rumelhart and James McClelland, Jürgen Schmidhuber, David G. Stork, the Tablet PC group of Microsoft, and more recently Peter Sterling and Simon Laughlin.

In approximate chronological order, the following people have also influenced the theory in a different and more indirect way through teachings and mentoring, discussions and advice, collaboration, support, and mere chance: Theodore Tomaras, Manolis Katevenis, John Hennessy, Nils Nilsson, Barbara Hayes-Roth, Marc Levoy, George John, Ahmad Abdulkader, Gordon Rios, Heather Alden, Japjit Tulsi, Sanjeev Katariya, and Balamurali Meduri. Gordon Rios and Sanjeev Katariya reviewed the manuscript of the first volume. Heather Alden also reviewed the last chapter.

It is sad to recall that D. Rumelhart, N. Nilsson, T. Tomaras, and A. Abdulkader passed away during the long development of this theory. The world is less without their kindness and wisdom.

Finally, many thanks to eBay for providing a great work environment as well as hardware and resources to implement and evaluate some parts of the theory. A completely new, redesigned, and more extensive implementation is currently being developed on our own hardware using PyTorch. A limited version was implemented in TensorFlow, and a number of experiments were performed on our own hardware as well as on Google Colaboratory. Gordon Rios ran some of the experiments on his own computer and Google Colaboratory, too.

Links

- Mathesis Facebook page

- Source code (on GitHub) for the MNIST squeeze-and-excitation flower net (SEF) ensemble